On November 4, I am going to present at Italy's OWASP Day E-Gov 09, the OWASP (Open Web Application Security Project) and CONSIP (a company of the Italian Department of Economy and Finance) security conference, on the topic of software security initiatives. In my presentation, I am going to first address the prerequisites for a software security initiative:

- Compliance with information security standards (e.g. PCI DSS);

- Education and awareness of the root causes of vulnerabilities in applications/software;

- Software security engineering benchmarking using a software security maturity model;

- Business cases to justify budget and investments in software security.

Since the initial case for a software security initiative is often made to senior management (the sponsors of the initiative), it is important to build the appropriate business cases and use the so-called "drivers" for software security adoption, such as executive-level reports from Gartner and Forrester as well as public research on software security from NIST, SEI and DHS. Examples of good resources include NIST research on the causes of vulnerabilities and on the economics of insecure software, and Gartner press releases on the economic impact of software security.

The next step is to assess the organization's secure software engineering processes and capabilities using a standard such as a Software Security Maturity Model (SSMM): the objective is to make the sponsors of the initiative aware of the organization's capabilities in secure software engineering, risk management, governance and training.

The Build Security In Maturity Model (BSIMM) from Dr. Gary McGraw and the Software Assurance Maturity Model (SAMM) from Mr. Pravir Chandra, both recently published (2009), can help organizations in the assessment, planning and implementation of software security initiatives. These models are explicitly designed for software security assurance and are based upon real data (surveys) from companies that have actually enacted and implemented software security initiatives. The models are organized along similar domains (governance, intelligence, SSDL touchpoints and deployment for BSIMM; governance, construction, verification and deployment for SAMM). Each domain has three best practices, and each practice has three levels of maturity. BSIMM's 12 best practices comprise a total of 110 software security activities, and maturity levels can be achieved by assigning goals and objectives to each activity.
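To make the assessment step more concrete, here is a minimal sketch of a maturity self-assessment scorecard, assuming SAMM's four business functions with their three practices each, every practice rated on a 0-3 scale (1-3 being the three maturity levels and 0, an assumption here, meaning "not in place"). The scores are hypothetical and the helper names `domain_scores` and `weakest_domain` are illustrative, not part of SAMM or BSIMM.

```python
from statistics import mean

# Hypothetical self-assessment: each SAMM practice is rated 0-3, where 1-3 are
# the three maturity levels and 0 (an assumption here) means "not in place".
assessment = {
    "Governance": {"Strategy & Metrics": 2, "Policy & Compliance": 1,
                   "Education & Guidance": 1},
    "Construction": {"Threat Assessment": 1, "Security Requirements": 0,
                     "Secure Architecture": 1},
    "Verification": {"Design Review": 2, "Code Review": 2,
                     "Security Testing": 3},
    "Deployment": {"Vulnerability Management": 1, "Environment Hardening": 2,
                   "Operational Enablement": 0},
}


def domain_scores(scores):
    """Average maturity level of each domain, rounded to two decimals."""
    return {domain: round(mean(practices.values()), 2)
            for domain, practices in scores.items()}


def weakest_domain(scores):
    """Domain with the lowest average maturity: a candidate roadmap priority."""
    averages = domain_scores(scores)
    return min(averages, key=averages.get)


if __name__ == "__main__":
    for domain, score in domain_scores(assessment).items():
        print(f"{domain}: {score}")
    print("Weakest domain:", weakest_domain(assessment))
```

A simple average per domain is only one possible aggregation; an organization may prefer to report the minimum practice level per domain so that a single missing practice is not hidden by strong neighbors.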

A traditional maturity model such as the Capability Maturity Model (CMM) can also be mapped to levels of software assurance, even though it is primarily designed to assess maturity in software quality assurance, engineering and other organizational domains. More specifically for the security domain, the System Security Engineering Capability Maturity Model (SSE-CMM) addresses the maturity of security systems as a whole rather than software security in particular. Nevertheless, based upon my previous professional experience with the SSE-CMM, it is possible to map software security activities to CMM maturity levels and provide a roadmap for software security maturity.

For example, it is possible to map software security from the initial (level 1) to the optimized (level 5) level via the repeatable (level 2), defined (level 3) and managed (level 4) levels of software security assurance. The mapping of software security activities for each level needs to include the main security domains, such as:

- Software Risk Analysis & Management

- Software Security Engineering

- Security Assessment Processes and Tools

- Security Training & Awareness

In my presentation, I provide the mapping of CMM maturity levels to software security processes, starting from security testing (referred to as the SSDL touchpoints domain in BSIMM and as the verification business function in SAMM). For most organizations, the evolution toward software security starts with application security assessments such as web application penetration testing and then evolves to secure code analysis and threat modeling, as well as other supporting best practices such as metrics and measurements, risk management, and software security training and awareness.

One fundamental element of any maturity model is the definition of the software security roadmap: the set of standard activities that bring an organization to a given capability level in software security, which can be measured both qualitatively and quantitatively.

For example, an organization can start at CMM Level 1 (initial) with a catch-and-patch approach and move to CMM Level 2 (repeatable but reactive) by ethical hacking of existing applications.

An organization can reach CMM Level 3 (defined and proactive) by defining a security testing process, such as vulnerability assessment, as part of the SDLC and adopting it at the organization level to assess vulnerabilities in every web application project.

At CMM Level 4 (managed), organizations are capable of risk-managing projects with checkpoints in all SDLC phases (e.g. asserting security in design, development and deployment) and of using vulnerability metrics to make informed risk management decisions at each checkpoint.

Figure: Software Assurance Maturity Curve and CMM Levels

At CMM Level 5 (optimized), organizations have optimized software security processes for increased return on security investment, security cost savings, and improved risk mitigation and reduction.
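The roadmap from Level 1 to Level 5 is cumulative: each level builds on the previous one. A minimal sketch of that staged check follows, assuming four yes/no capability questions paraphrased from the level descriptions above; the `SecurityCapabilities` class, its field names and `cmm_level` are hypothetical illustrations, not part of CMM or SSE-CMM.

```python
from dataclasses import dataclass


@dataclass
class SecurityCapabilities:
    """Hypothetical yes/no answers used to place an organization on the roadmap."""
    pen_tests_existing_apps: bool = False        # ethical hacking of existing applications
    testing_process_in_sdlc: bool = False        # vulnerability assessment defined in the SDLC, org-wide
    sdlc_checkpoints_with_metrics: bool = False  # checkpoints in all SDLC phases, driven by metrics
    optimizing_for_rosi: bool = False            # processes tuned for return on security investment


LEVELS = {
    1: "Initial: catch and patch",
    2: "Repeatable but reactive: ethical hacking of existing applications",
    3: "Defined and proactive: security testing process in the SDLC",
    4: "Managed: SDLC checkpoints driven by vulnerability metrics",
    5: "Optimized: processes tuned for return on investment and risk reduction",
}


def cmm_level(c: SecurityCapabilities) -> int:
    """Return the highest level whose prerequisites, and all lower ones, are met."""
    steps = [c.pen_tests_existing_apps, c.testing_process_in_sdlc,
             c.sdlc_checkpoints_with_metrics, c.optimizing_for_rosi]
    level = 1
    for achieved in steps:
        if not achieved:
            break  # a gap at a lower level caps the maturity level
        level += 1
    return level


if __name__ == "__main__":
    org = SecurityCapabilities(pen_tests_existing_apps=True,
                               testing_process_in_sdlc=True)
    level = cmm_level(org)
    print(f"CMM Level {level}: {LEVELS[level]}")
```

Stopping at the first missing capability reflects the idea that levels cannot be skipped: an organization with metrics-driven checkpoints but no org-wide testing process is still placed at Level 2.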

One essential factor in achieving maturity is understanding the concept of the maturity curve. Similar to the learning curve for acquiring knowledge and skills, a maturity curve shows that time is needed to acquire maturity. Since the maturity curve provides the time frame for reaching software security maturity, it helps in planning, in setting the right expectations with management, and in factoring in the costs.

For example, according to the maturity curve, the effort required for an organization to move from CMM Level 3.5 to Level 4 is the highest, hence only a few large organizations can afford the required cost.

This costly step coincides with the transition from proactively defining a software security process to managing it throughout the SDLC for each product at the organization-wide level. From a time perspective, software security processes are not acquired and assimilated overnight but over the course of several years, especially when the software security initiative impacts several business units with different SDLCs, as well as hundreds of web applications to risk-manage.

Among the factors most critical to the success of software security initiatives are metrics and measurements: only by defining what we should measure, where and how will it be possible to manage software security risks and assess the organization's maturity in acquiring and assimilating software security best practices.

The essential software security metrics for a successful software security initiative include process and vulnerability management metrics, vulnerability root cause analysis, governance and risk analysis.
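As an illustration of vulnerability management and root cause metrics, the sketch below computes the share of findings per root cause, the mean time to fix, and the number of open findings. It assumes a hypothetical `Finding` record (application, root cause, date found, date fixed) such as one might export from a vulnerability tracker; the records and root-cause labels are made up for the example.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class Finding:
    """Hypothetical vulnerability record, e.g. exported from a tracking system."""
    app: str
    root_cause: str              # e.g. "input validation", "authentication"
    found: date
    fixed: Optional[date] = None  # None while the vulnerability is still open


def root_cause_breakdown(findings: List[Finding]) -> List[Tuple[str, float]]:
    """Share of findings per root cause, most frequent first."""
    counts = Counter(f.root_cause for f in findings)
    total = sum(counts.values())
    return [(cause, round(n / total, 2)) for cause, n in counts.most_common()]


def mean_days_to_fix(findings: List[Finding]) -> Optional[float]:
    """Average remediation time in days, over the findings already fixed."""
    durations = [(f.fixed - f.found).days for f in findings if f.fixed is not None]
    return sum(durations) / len(durations) if durations else None


def open_findings(findings: List[Finding]) -> int:
    """Number of findings not yet remediated."""
    return sum(1 for f in findings if f.fixed is None)


# Hypothetical example data.
findings = [
    Finding("portal", "input validation", date(2009, 9, 1), date(2009, 9, 11)),
    Finding("portal", "input validation", date(2009, 9, 3), date(2009, 9, 23)),
    Finding("billing", "authentication", date(2009, 9, 5)),
    Finding("billing", "input validation", date(2009, 9, 7), date(2009, 9, 13)),
]

if __name__ == "__main__":
    print(root_cause_breakdown(findings))
    print(mean_days_to_fix(findings), open_findings(findings))
```

Tracking these numbers per release or per business unit is what turns them into trend metrics that can feed the risk analysis.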

On November 5, I will be in Milan to present at Italy OWASP Day 4 on the business cases that justify investments in software security initiatives. The goal of this presentation is to help security managers answer questions from senior management about the security budget, such as why we should spend money on software security, how much we should spend, and where.

In a nutshell, this means quantifying the business case for software security in terms of security costs, using cost vs. benefit analysis, assumption costs vs. failure costs, quantitative risk analysis and Return on Security Investment (ROSI).

The key to the analysis is being able to estimate software security failure costs, such as those due to the business impact of a data breach or fraud. For most organizations this is a daunting task because such data are not available, so I am suggesting an approach that estimates these costs from public sources: reported data breaches from the FTC (Federal Trade Commission), data loss incident data from datalossdb.org, and correlation of incidents to vulnerabilities from the Web Hacking Incident Database (WHID).

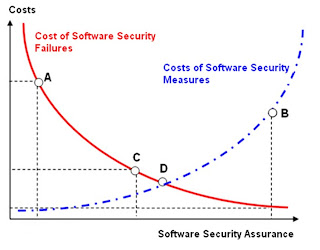

Quantifying failure costs is essential for determining the benefits of security initiatives, so that they can be justified using cost vs. benefit analysis. In the presentation I show how assumption costs (the costs that your organization assumes for software security initiatives) relate to failure costs (the costs that the organization incurs because of insecure software) as the level of software security assurance increases: the objective is to justify an investment in software security by monetizing the security costs.

Some studies that use cost vs. benefit analysis show that a security investment can be justified when its cost is around an optimal value of 30-40% of the overall failure costs.

This can be deduced by optimizing the overall costs, factoring in both the cost of security failures and the cost of security measures.
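The optimization idea can be sketched with a toy model: total cost is the money spent on security measures plus the expected failure cost that remains after that spending. The exponential failure-cost curve and the parameter values `BASELINE_FAILURE_COST` and `K` are assumptions chosen for illustration, not figures from the studies mentioned above; with them the minimum has a closed form.

```python
import math

# Hypothetical model (an assumption, not data from the cited studies):
#   failure_cost(x)    = BASELINE_FAILURE_COST * exp(-K * x)
#   assumption_cost(x) = x   (money spent on security measures)
BASELINE_FAILURE_COST = 1_000_000.0  # expected failure cost with no security spending
K = 4e-6                             # how quickly spending reduces failure cost (made up)


def failure_cost(x: float) -> float:
    """Expected failure cost remaining after spending x on security."""
    return BASELINE_FAILURE_COST * math.exp(-K * x)


def total_cost(x: float) -> float:
    """Assumption cost plus remaining failure cost."""
    return x + failure_cost(x)


def optimal_spend() -> float:
    """Minimum of total_cost: d/dx [x + F0*exp(-K*x)] = 1 - K*F0*exp(-K*x) = 0.

    If K * F0 <= 1, spending never pays for itself in this model, so return 0.
    """
    return max(0.0, math.log(K * BASELINE_FAILURE_COST) / K)


if __name__ == "__main__":
    x_opt = optimal_spend()
    print(f"optimal spend: {x_opt:,.0f}")
    print(f"share of baseline failure cost: {x_opt / BASELINE_FAILURE_COST:.0%}")
    print(f"total cost at optimum: {total_cost(x_opt):,.0f}")
```

With these made-up parameters the optimum lands at roughly a third of the baseline failure cost; different curve shapes or parameters move it, which is why real estimates of failure costs matter.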

Figure: Optimized Software Security Costs

Another method for justifying software security costs is quantitative risk analysis, which allows one to estimate the annualized impact of a loss, such as one caused by an insecure software vulnerability like SQL injection. For example, it is possible to calculate a rough estimate of the ALE (Annualized Loss Expectancy) for a SQL injection attack by estimating the probability of such an attack occurring from data on reported incidents. FTC (US Federal Trade Commission) data loss figures show that the probability of a company incurring a loss of PII is about 4.5%, while the probability of a data loss through the web channel due to SQL injection is about 2.5%. The business impact can be quantified using the FTC estimate of about $655 per lost record, multiplied by the number of records that could potentially be lost, to estimate the overall asset value at risk. This value can then be multiplied by the probability of the loss to calculate the liability for the company. According to FTC data, in the case of a generic data loss (e.g. a probability of 4.5%), the liability for a company is about $35 per lost PII record.
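The ALE arithmetic above reduces to asset value at risk (records times cost per record) multiplied by the annual probability of the loss. A minimal sketch follows, using the $655 per record and 2.5% SQL injection figures quoted in the text; the number of exposed records (100,000 in the example) is a hypothetical input, and treating the quoted probability as an annual rate is an assumption.

```python
# Figures quoted in the text above.
COST_PER_RECORD = 655.0       # FTC estimate, USD per lost record
P_SQL_INJECTION_LOSS = 0.025  # probability of a web-channel data loss via SQL injection,
                              # treated here (an assumption) as an annual rate


def asset_value_at_risk(records: int, cost_per_record: float = COST_PER_RECORD) -> float:
    """Impact if all exposed records are lost (single loss expectancy)."""
    return records * cost_per_record


def annualized_loss_expectancy(records: int,
                               probability: float = P_SQL_INJECTION_LOSS,
                               cost_per_record: float = COST_PER_RECORD) -> float:
    """ALE = asset value at risk * annual probability of the loss."""
    return asset_value_at_risk(records, cost_per_record) * probability


if __name__ == "__main__":
    records = 100_000  # hypothetical number of PII records exposed by one web application
    print(f"asset value at risk: ${asset_value_at_risk(records):,.0f}")
    print(f"ALE (SQL injection): ${annualized_loss_expectancy(records):,.0f}")
```

Keeping the probability and cost per record as parameters makes it easy to swap in an organization's own incident data when it becomes available.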

Another security cost analysis method is to evaluate the cost savings that the software security initiative brings to the company by calculating the ROSI (Return on Security Investment) of software security. I will refer to previous studies (the Soo Hoo IBM study) that use ROSI to justify investments in software security activities, and I will use a standard ROSI formula (Sonnenreich) together with the previously computed ALE (Annualized Loss Expectancy) to determine the ROSI. The Soo Hoo study shows that, when comparing software security assessments such as threat modeling, source code analysis and penetration testing, threat modeling provides the highest return on investment since it is done earlier in the SDLC: if you spend $100K on a software security initiative, for example, 21% will be saved by adopting threat modeling.
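The standard ROSI formula relates the reduction in annualized loss to the cost of the security measure: ROSI = (ALE × mitigation ratio − cost of solution) / cost of solution. A minimal sketch follows; the ALE, mitigation ratios and costs in the usage example are hypothetical values, not results from the Soo Hoo study.

```python
def rosi(ale: float, mitigation_ratio: float, solution_cost: float) -> float:
    """ROSI = (ALE * mitigation_ratio - solution_cost) / solution_cost.

    ale              -- annualized loss expectancy without the measure
    mitigation_ratio -- fraction of the ALE the measure is expected to remove (0-1)
    solution_cost    -- annual cost of the security measure
    """
    if solution_cost <= 0:
        raise ValueError("solution_cost must be positive")
    if not 0.0 <= mitigation_ratio <= 1.0:
        raise ValueError("mitigation_ratio must be between 0 and 1")
    return (ale * mitigation_ratio - solution_cost) / solution_cost


if __name__ == "__main__":
    # Hypothetical comparison of two activities applied to the same ALE.
    ale = 100_000.0
    for activity, ratio, cost in [("activity A", 0.5, 20_000.0),
                                  ("activity B", 0.3, 20_000.0)]:
        print(f"{activity}: ROSI = {rosi(ale, ratio, cost):.0%}")
```

A ROSI above zero means the expected loss reduction exceeds the cost of the measure; comparing activities with the same ALE highlights which one removes more risk per dollar spent.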

Finally, I will cover the dashboard metrics that can be used to support the business cases for different stakeholders in an organization. Since the business case is initially made with estimated engineering and security data, it needs to be supported with measurements in the field. These dashboard metrics need to show management that the software security initiative provides value to the stakeholders and that it is aligned with other company goals and values, such as financial value for the company, value for the company's customers, value for the internal business processes, and value for learning and growth.
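The four kinds of value listed above (financial, customer, internal business processes, learning and growth) are the perspectives of a balanced scorecard, so a dashboard can group metrics under them. Below is a minimal sketch, assuming a hypothetical `Metric` record with a target and a direction; the metric names, values and targets are invented for illustration.

```python
from dataclasses import dataclass

PERSPECTIVES = ("financial", "customer", "internal business processes",
                "learning and growth")


@dataclass
class Metric:
    """Hypothetical dashboard metric with a target value."""
    name: str
    perspective: str
    value: float
    target: float
    higher_is_better: bool = True

    def on_target(self) -> bool:
        if self.higher_is_better:
            return self.value >= self.target
        return self.value <= self.target


def dashboard(metrics):
    """For each perspective, report how many of its metrics meet their target."""
    summary = {}
    for p in PERSPECTIVES:
        group = [m for m in metrics if m.perspective == p]
        hits = sum(m.on_target() for m in group)
        summary[p] = f"{hits}/{len(group)}"
    return summary


# Hypothetical example metrics.
example_metrics = [
    Metric("ROSI", "financial", 1.5, 1.0),
    Metric("customer-reported vulnerabilities", "customer", 3, 5, higher_is_better=False),
    Metric("web apps assessed at SDLC checkpoints (%)", "internal business processes", 70, 90),
    Metric("developers trained (%)", "learning and growth", 80, 75),
]

if __name__ == "__main__":
    for perspective, status in dashboard(example_metrics).items():
        print(f"{perspective}: {status} on target")
```

Reporting every perspective, even one with no metrics ("0/0"), makes gaps in the measurement program visible to management.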
